The DRIVE project introduces a novel method that lets users manipulate virtual content, such as AR objects, with their bare hands in the space behind a display. This approach helps bridge the gap between physical and virtual spaces.

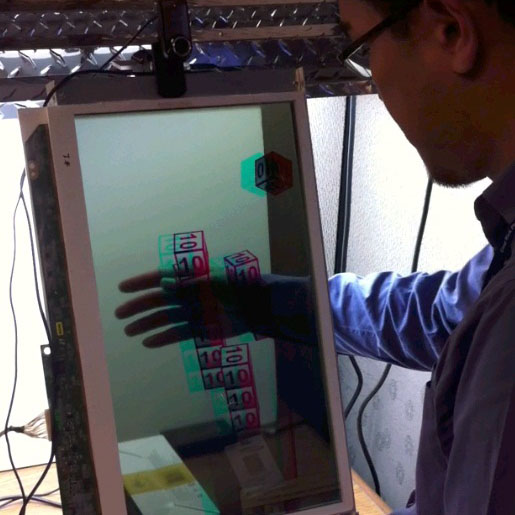

Multiple prototypes were designed and built to explore this concept. These include video see-through versions, which use a tablet with an inline camera and sensors on the back, and optical see-through versions, which use a transparent LCD panel with a depth sensor behind the device and a front-facing imager.

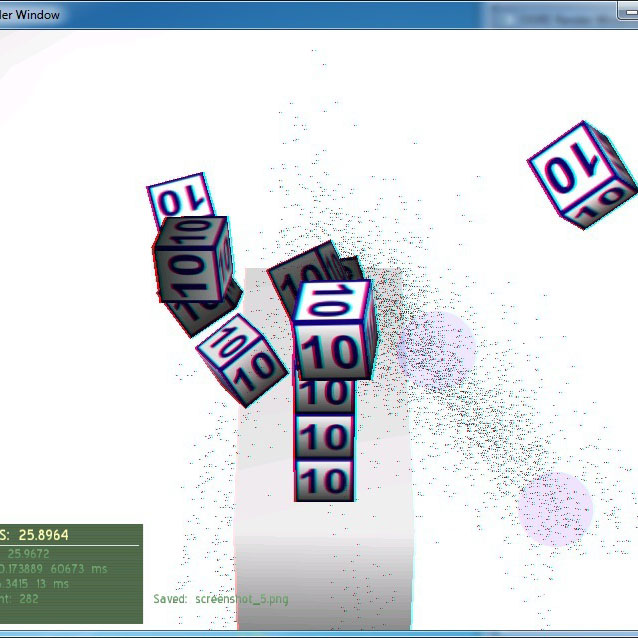

The optical see-through system combines several features to create a compelling user experience. Anaglyphic stereoscopic rendering makes objects appear to sit behind the device, enhancing depth perception. Real-time face tracking enables view-dependent rendering, so virtual content appears to "stick" to the real world as the user moves. Hand tracking based on a time-of-flight depth camera enables bare-hand manipulation without markers or gloves. The system also simulates physics between the hand and virtual objects, making interaction with 3D content more intuitive.
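Face tracking drives view-dependent rendering by making the virtual camera follow the viewer's eye, so the display behaves like a window onto the space behind it. The sketch below shows one common way to do this, an off-axis (asymmetric) perspective frustum in the `glFrustum` convention; it is an illustrative assumption, not code from the DRIVE prototype. It assumes the display is a `screen_w` by `screen_h` rectangle (in metres) centred at the origin in the z = 0 plane, with the viewer at positive z and virtual content at negative z. The stereo part assumes red-cyan glasses with the red filter on the left eye and a hypothetical fixed interpupillary distance `ipd`; all function names are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat4 = List[List[float]]


def off_axis_frustum(eye: Vec3, screen_w: float, screen_h: float,
                     near: float, far: float) -> Mat4:
    """Asymmetric projection (glFrustum convention) for a screen centred at the
    origin in the z = 0 plane, seen from `eye`, which must have z > 0."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    s = near / ez  # similar triangles: screen edges projected onto the near plane
    left, right = (-screen_w / 2 - ex) * s, (screen_w / 2 - ex) * s
    bottom, top = (-screen_h / 2 - ey) * s, (screen_h / 2 - ey) * s
    return [
        [2 * near / (right - left), 0.0, (right + left) / (right - left), 0.0],
        [0.0, 2 * near / (top - bottom), (top + bottom) / (top - bottom), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def view_matrix(eye: Vec3) -> Mat4:
    """Camera axes stay aligned with the screen; only the eye position moves."""
    ex, ey, ez = eye
    return [[1.0, 0.0, 0.0, -ex],
            [0.0, 1.0, 0.0, -ey],
            [0.0, 0.0, 1.0, -ez],
            [0.0, 0.0, 0.0, 1.0]]


def mat_vec(m: Mat4, v: List[float]) -> List[float]:
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def project(point: Vec3, eye: Vec3, screen_w: float, screen_h: float,
            near: float = 0.01, far: float = 10.0) -> Tuple[float, float]:
    """Normalized device coordinates of a world point (-1..1 spans the screen)."""
    cam = mat_vec(view_matrix(eye), [point[0], point[1], point[2], 1.0])
    clip = mat_vec(off_axis_frustum(eye, screen_w, screen_h, near, far), cam)
    return clip[0] / clip[3], clip[1] / clip[3]


def eye_positions(face_center: Vec3, ipd: float = 0.063) -> Tuple[Vec3, Vec3]:
    """Left/right eye positions, assuming the head faces the screen squarely."""
    x, y, z = face_center
    return (x - ipd / 2, y, z), (x + ipd / 2, y, z)


def anaglyph_pixel(left_rgb: Tuple[int, int, int],
                   right_rgb: Tuple[int, int, int]) -> Tuple[int, int, int]:
    """Red-cyan anaglyph: red from the left-eye image, green/blue from the right."""
    return left_rgb[0], right_rgb[1], right_rgb[2]
```

A point lying on the display plane always maps to the same screen position wherever the eye is, while points behind the display shift with head motion. That parallax is what makes the content appear fixed in the real world as the user moves; rendering the scene once from each of the two `eye_positions` and merging the results with `anaglyph_pixel` gives the stereo image.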

The system is built on established software and hardware components. OpenCV handles vision processing and face tracking, while Ogre3D handles graphics rendering. Early prototypes used a Samsung 22-inch transparent display panel, and finger tracking is done with a PMD CamBoard depth camera, which works at closer range than a Kinect sensor. The system tracks the user's face in real time at 30 frames per second, and hand detection and localization take just 22 milliseconds. This fast response is crucial for maintaining the illusion of directly manipulating virtual objects.
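The text does not describe how the hand is found in the depth image. As a rough illustration only, the sketch below assumes the hand is the closest object to the depth camera inside a fixed interaction volume: it finds the nearest valid depth sample, keeps every pixel within a hypothetical `hand_thickness_mm` slab behind it, and reports the centroid and mean depth of that region. The thresholds, the millimetre units and the `locate_hand` name are all assumptions, not necessarily the method used in the prototype.

```python
from typing import Dict, List, Optional, Tuple

DepthImage = List[List[float]]  # rows of depth samples in millimetres, 0 = no return


def locate_hand(depth: DepthImage, min_mm: float = 100.0, max_mm: float = 600.0,
                hand_thickness_mm: float = 120.0) -> Optional[Dict[str, object]]:
    """Segment the object nearest to the camera inside [min_mm, max_mm] and
    return simple hand features, or None if nothing is in the volume."""
    # 1. Find the nearest valid sample inside the interaction volume.
    nearest: Optional[Tuple[int, int, float]] = None
    for y, row in enumerate(depth):
        for x, d in enumerate(row):
            if min_mm <= d <= max_mm and (nearest is None or d < nearest[2]):
                nearest = (x, y, d)
    if nearest is None:
        return None

    # 2. Keep every sample within a slab behind the nearest point.
    #    (No connectivity check: anything else in the slab is counted too.)
    cutoff = min(nearest[2] + hand_thickness_mm, max_mm)
    pixels = [(x, y, d)
              for y, row in enumerate(depth)
              for x, d in enumerate(row)
              if min_mm <= d <= cutoff]

    # 3. Localize: centroid and mean depth of the segmented region.
    n = len(pixels)  # at least 1, since the nearest sample is in the slab
    return {
        "nearest": nearest,
        "centroid": (sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n),
        "mean_depth_mm": sum(p[2] for p in pixels) / n,
        "area_px": n,
    }
```

A single pass like this is linear in the number of pixels; a real system would also need to reject other objects entering the volume and filter sensor noise.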

The Behind-Display 3D Interaction System has already attracted significant interest within Samsung. A prototype was demonstrated to a large audience at the Samsung Tech Fair in 2010, and patent applications covering the novel aspects of the system were filed after this presentation.

The applications for this technology are diverse. In gaming, it could enable direct interaction with game content and characters, creating more immersive experiences. Virtual worlds could benefit from more natural interactions between real people and avatars. In telepresence, the system could combine local and remote video with computer-generated content. In design and manufacturing, CAD/CAM workflows could be enhanced through direct manipulation of 3D models. In medicine, the system could facilitate navigation and manipulation of 3D medical image content.

As development continues, smaller versions of the system are being envisioned. These could be handheld rather than mounted on a frame, making the technology more portable and versatile.

Video

This video shows a research prototype that demonstrates a behind-display interaction method. Anaglyph-based 3D rendering (viewed through colored glasses) and face tracking for view-dependent rendering create the illusion of dice sitting on top of a physical box. A depth camera pointed at the user's hands behind the display creates a 3D model of the hand. The hand and the virtual objects interact through a physics engine, so users can interact seamlessly with both real and virtual objects.
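The physics interaction can be pictured as the hand acting as a set of kinematic collision proxies that push virtual objects around. The sketch below is a toy stand-in for a full physics engine, not the engine used in the prototype (the text does not name one): each hand point from the depth camera becomes a small sphere, a die is approximated by a sphere, overlaps produce a push-only spring-damper contact force, and the top of the physical box is modelled as a floor plane at `floor_y`. All names, units (metres, kilograms, seconds) and constants are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Body:
    """A virtual die approximated as a sphere (SI units: m, kg, m/s)."""
    pos: List[float]
    vel: List[float]
    radius: float
    mass: float


def step(body: Body, hand_points: List[Vec3], dt: float = 1 / 120,
         proxy_radius: float = 0.01, stiffness: float = 50.0,
         damping: float = 0.5, floor_y: float = 0.0, g: float = 9.81) -> None:
    """Advance the body by one time step, pushed by kinematic hand proxies."""
    force = [0.0, -body.mass * g, 0.0]  # gravity along -y
    for hp in hand_points:
        offset = [body.pos[i] - hp[i] for i in range(3)]
        dist = math.sqrt(sum(c * c for c in offset))
        overlap = body.radius + proxy_radius - dist
        if overlap > 0 and dist > 1e-9:
            normal = [c / dist for c in offset]  # from the hand point towards the body
            v_n = sum(body.vel[i] * normal[i] for i in range(3))
            # Spring pushes the body out of the proxy, damper resists normal
            # motion; clamped at zero so a contact can only push, never pull.
            f = max(stiffness * overlap - damping * v_n, 0.0)
            for i in range(3):
                force[i] += f * normal[i]
    # Semi-implicit Euler: update velocity first, then position with the new velocity.
    for i in range(3):
        body.vel[i] += force[i] / body.mass * dt
        body.pos[i] += body.vel[i] * dt
    # The physical box top acts as a floor the die cannot sink through.
    if body.pos[1] - body.radius < floor_y:
        body.pos[1] = floor_y + body.radius
        body.vel[1] = max(body.vel[1], 0.0)
```

Because the hand points are kinematic (moved only by tracking), the hand pushes the dice but the dice never push back on the hand, which is how a tracked real object has to behave in such a simulation. The small default time step keeps the explicit spring integration stable for the default stiffness and a die of a few tens of grams.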

This video, together with a short paper, was presented at the MVA 2011 conference (12th IAPR Conference on Machine Vision Applications, Nara, Japan, June 13-15, 2011).

Please note that the video itself is not 3D: to the user wearing the glasses, the virtual content appears behind the display, where (from the user's perspective) it sits on a physical box.

Publications

- Seung Wook Kim, Anton Treskunov, Stefan Marti (2011). DRIVE: Directly Reaching Into Virtual Environment With Bare Hand Manipulation Behind Mobile Display. IEEE Symposium on 3D User Interfaces 2011, 19-20 March, Singapore [Poster], pp. 107-108.
- Anton Treskunov, Seung Wook Kim, Stefan Marti (2011). Range Camera for Simple behind Display Interaction. Proceedings of MVA 2011 IAPR Conference on Machine Vision Applications, June 13-15, 2011, Nara, Japan, pp. 160-163.